Before we trust model projections of sea level rise, it is reasonable to demand that the models can reproduce the observed record of global mean sea level rise. Gregory et al. (2012) write: “Confidence in projections of global-mean sea level rise (GMSLR) depends on an ability to account for GMSLR during the twentieth century”.

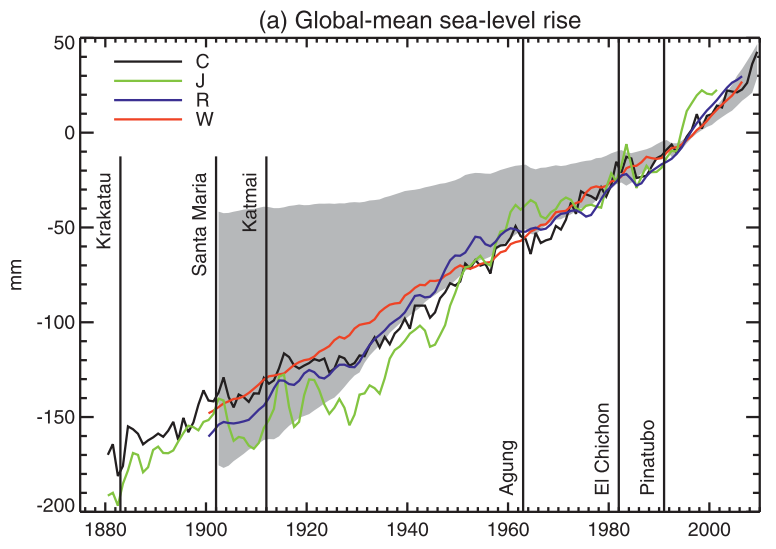

Gregory et al. (2012) investigate this systematically. They select a set of different models for each of the individual contributors to sea level rise (SLR). They then try every combination of the selected models and compare the summed result with the observed global mean sea level. The result is shown in Figure 1.

Figure 1: Comparison of time series of annual-mean global mean sea level rise from four analyses of tide gauge data (lines) with the range of the 144 synthetic time series (gray shading). Each of the synthetic time series is the sum of a different combination of thermal expansion, glacier, Greenland ice sheet, groundwater, and reservoir time series.

This graph shows that it is only possible to close the sea level budget if you cherry-pick the most sensitive models. In general, the whole is greater than the sum of the parts: the observed rise is larger than the sum of the modelled contributions. This is not how the paper frames it; the authors instead argue that they can satisfactorily account for the GMSLR. Personally, this need to cherry-pick does not reassure me.
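As a rough illustration of the combination test described above, here is a minimal Python sketch. Every number in it is an invented placeholder, not a value from Gregory et al. (2012); the per-contributor model counts are chosen only so that their product is 144, and the names `contributions_mm` and `observed_range_mm` are hypothetical. It sums one model per contributor for every combination and reports what fraction of the synthetic totals fall inside an assumed observed range.

```python
from itertools import product

# Hypothetical 20th-century contributions in mm, one entry per candidate model.
# Placeholder values for illustration only; not taken from the paper.
contributions_mm = {
    "thermal_expansion": [30.0, 40.0, 50.0],
    "glaciers": [50.0, 60.0, 80.0, 100.0],
    "greenland": [5.0, 15.0, 25.0],
    "groundwater": [5.0, 15.0],
    "reservoirs": [-30.0, -20.0],
}

# Assumed range for the observed rise from tide gauges (mm); also a placeholder.
observed_range_mm = (150.0, 200.0)


def synthetic_totals(contributions):
    """Sum one model per contributor, for every possible combination."""
    return [sum(combo) for combo in product(*contributions.values())]


def fraction_closing_budget(totals, observed_range):
    """Fraction of synthetic totals that land inside the observed range."""
    low, high = observed_range
    hits = [t for t in totals if low <= t <= high]
    return len(hits) / len(totals)


totals = synthetic_totals(contributions_mm)
print(len(totals))  # 3 * 4 * 3 * 2 * 2 = 144 combinations
print(min(totals), max(totals))
print(fraction_closing_budget(totals, observed_range_mm))
```

With real model output in place of the placeholders, the last number is the one I would want to see reported: how many of the combinations actually close the budget, rather than whether the envelope of all combinations touches the observations.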

Motive

A severe problem with the Gregory et al. paper is that the authors claim their analysis has implications for semi-empirical models. This assertion is unsupported in their study. There is no analysis of semi-empirical models whatsoever, and they show no evidence for the statement. This suggests to me that there is an agenda: they want to convince us that we should have confidence in their preferred type of models but not in the alternatives (semi-empirical models). Frankly, I think they succeeded in demonstrating pretty much the opposite.

Another paper / same authors

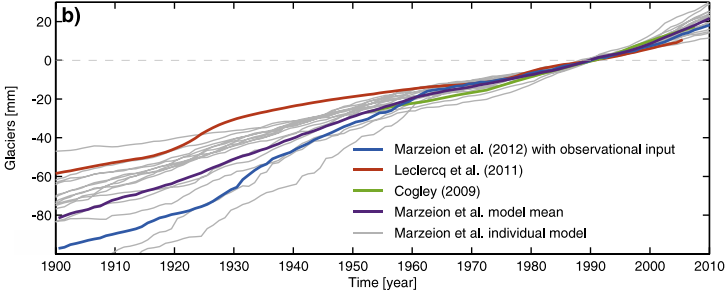

Church et al. (2013) also try to argue that we can have confidence in process models of sea level rise. The first thing I notice is that the author team has a great deal of overlap with Gregory et al. (2012). In this new paper they repeat the exercise of testing whether they can close the 20th century sea level budget. The difference from the Gregory et al. study is that in this case they pick one particular set of models which roughly closes the budget. The problem is that they select models that disagree with data when you look in detail: for example, they pick a version of the glacier model with a much greater historical sea level contribution than our best data-based estimate from Leclercq. Is that a successful validation of the models against data?

Figure 2: Blue is the modelled glacier contribution used to close the budget in Church et al. (2013), and red is a data-based estimate. Clearly the chosen model has a much larger contribution than the data suggest.

Both papers also add a constant long-term ice sheet equilibration term of 0-0.2 mm/yr. I do not want to discuss this term in detail here, but I note that I think this estimate needs to be updated with more recent modelling. I am also concerned that this term may be counted twice in the budget.

References:

Gregory et al. 2012, Twentieth-Century Global-Mean Sea Level Rise: Is the Whole Greater than the Sum of the Parts?, http://dx.doi.org/10.1175/JCLI-D-12-00319.1

Church et al. 2013, Evaluating the ability of process based models to project sea-level change, ERL, http://iopscience.iop.org/1748-9326/8/1/014051

Rahmstorf at RealClimate: http://www.realclimate.org/index.php/archives/2013/01/sea-level-rise-where-we-stand-at-the-start-of-2013/